Recently I’ve been working on an Ansible upgrade project that included building out an Ansible Automation Platform installation and upgrading legacy Ansible code to modern standards. The Ansible code we were working with had been written mostly for Enterprise Linux versions 6 and 7 and followed coding standards that predate Ansible 2.9.

The newer versions of Ansible and Ansible Automation Platform utilise Execution Environments to run the ansible engine against a host. An Execution Environment is a container built with Ansible dependencies, Python libraries and Ansible Collections baked in.

The legacy Ansible codebase I was working with also does a lot of “magic” configuration to set things up across the environment, so I had to make sure that everything worked as it did previously. I tested a few of the off-the-shelf execution environments, but none of them did what we needed.

In this post I’ll walk through a quick tutorial on building a custom execution environment for running your Ansible code.

I’m using Fedora Linux 39 to set up a development environment, but most Linux distributions should follow similar steps.

From the command line, install the required dependencies. As execution environments are containers, we need a container runtime and for that we’ll use Podman. We also need some build tools.

$ sudo dnf install podman python3-pip

Now to install the Ansible dependencies.

$ python3 -m pip install ansible-navigator ansible-builder

Ansible navigator is the new interface to running Ansible and is great for testing out different execution environments and your ansible code as you’re developing. I briefly demonstrated using Ansible navigator in my article about using Ansible to configure Linux servers. You need the tools in Ansible builder to create the container images.
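Before going further, it can help to confirm that the tools are actually on your PATH (a pip install as a regular user typically puts them in ~/.local/bin). The following is a minimal sketch; `check_tools` is a helper name I made up for illustration and is not part of any of these tools.

```shell
# Report which of the given commands are available on PATH.
# Returns the number of missing commands (0 means all were found).
check_tools() {
  missing=0
  for tool in "$@"; do
    if command -v "$tool" >/dev/null 2>&1; then
      echo "found:   $tool"
    else
      echo "missing: $tool"
      missing=$((missing + 1))
    fi
  done
  return "$missing"
}

check_tools podman ansible-builder ansible-navigator \
  || echo "install the missing tools before continuing"
```

If anything is reported as missing after the pip install, check that the directory pip installed into is included in your PATH.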

If you’ve ever built Docker containers before, the steps for EEs are very similar just with the Ansible builder wrapper. Create a folder to store your files.

$ mkdir custom-ee && cd custom-ee

The main file we need to create is the execution-environment.yml file, which Ansible builder uses to build the image.

    ---
    version: 3

    images:
      base_image:
        name: quay.io/centos/centos:stream9

    dependencies:
      python_interpreter:
        package_system: python3.11
        python_path: /usr/bin/python3.11
      ansible_core:
        package_pip: ansible-core>=2.15
      ansible_runner:
        package_pip: ansible-runner
      galaxy: requirements.yml
      system: bindep.txt
      python: |
        netaddr
        receptorctl

    additional_build_steps:
      append_base:
        - RUN $PYCMD -m pip install -U pip
      append_final:
        - COPY --from=quay.io/ansible/receptor:devel /usr/bin/receptor /usr/bin/receptor
        - RUN mkdir -p /var/run/receptor
        - RUN git lfs install --system
        - RUN alternatives --install /usr/bin/python python /usr/bin/python3.11 311

The main parts of the file are fairly self-explanatory, but from the top:

- We’re using version 3 of the ansible builder spec.

- The base container image we’re building from is CentOS stream 9 pulled from Quay.io.

- We want to use Python 3.11 inside the container.

- We want Ansible core version 2.15 or later.

In the dependencies section, we can specify additional software our image requires. The galaxy entry lists the Ansible collections to install from the Galaxy repository. The system entry lists the packages installed with DNF inside the image. The python entry lists the Python dependencies we need: Ansible is written in Python, and it requires certain libraries to be available depending on your requirements.

The Galaxy collections are being defined in an external file called requirements.yml which is in the working directory with the execution-environment.yml file. It’s simply a YAML file with the following entries:

    ---
    collections:
      - name: ansible.posix
      - name: ansible.utils
      - name: ansible.netcommon
      - name: community.general

My project requires the ansible.posix, ansible.utils and ansible.netcommon collections, and the community.general collection. Previously, all of these collections would have been part of the ansible codebase and installed when you install Ansible, however the Ansible project has decided to split these out into collections, making the Ansible core smaller and more modular. You might not need these exact collections, or you might require different collections depending on your environment, so check out the Ansible documentation.

Next is the bindep.txt file for the system binary dependencies. These are installed in our image, which is CentOS, using DNF.

    epel-release [platform:rpm]
    python3.11-devel [platform:rpm]
    python3-libselinux [platform:rpm]
    python3-libsemanage [platform:rpm]
    python3-policycoreutils [platform:rpm]
    sshpass [platform:rpm]
    rsync [platform:rpm]
    git-core [platform:rpm]
    git-lfs [platform:rpm]

Again, you might require different dependencies, so check the documentation for the Ansible modules you’re using.

Under the Python section, I’ve defined the Python dependencies directly rather than using a separate file. If you prefer a separate file, it’s conventionally called requirements.txt.

    netaddr
    receptorctl

Netaddr is the Python library for working with IP addresses, which the Ansible codebase I was working with needed, and receptorctl is a Python library for working with Receptor, the network service mesh implementation that Ansible uses to distribute work across execution nodes.
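Because the galaxy and system entries point at external files, a build will fail if one of them is missing or misnamed. Here is a rough pre-build check, assuming the simple `galaxy: <file>` and `system: <file>` form used in this post; `preflight` is a hypothetical helper of my own, not something Ansible builder provides.

```shell
# Check that the definition file and the files it references exist.
# Only handles the flat "galaxy: <file>" / "system: <file>" form.
preflight() {
  ee_file="${1:-execution-environment.yml}"
  [ -f "$ee_file" ] || { echo "missing: $ee_file"; return 1; }
  rc=0
  for key in galaxy system; do
    # Extract the value after "key:" (first match only), ignoring indentation.
    ref=$(sed -n "s/^[[:space:]]*${key}:[[:space:]]*\([^[:space:]]\{1,\}\).*$/\1/p" "$ee_file" | head -n 1)
    [ -n "$ref" ] || continue
    # Referenced files are looked up next to the definition file.
    if [ -f "$(dirname "$ee_file")/$ref" ]; then
      echo "ok:      $key -> $ref"
    else
      echo "missing: $key -> $ref"
      rc=1
    fi
  done
  return "$rc"
}

preflight execution-environment.yml || echo "fix the problems above before building"
```

This is deliberately naive text matching rather than real YAML parsing, so treat it as a convenience check only.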

With all of that defined, we can build the image.

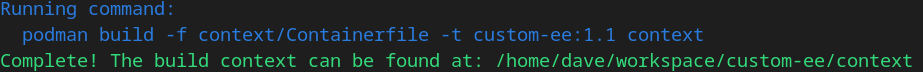

$ ansible-builder build --tag=custom-ee:1.1

The custom-ee:1.1 tag is the name we’ll use to refer to the image from Ansible. The ansible-builder command runs Podman to build the container image. The build should take a few minutes. If everything went according to plan, you should see a success message.
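If the build fails, more verbose output usually makes the failing step easier to spot. The sketch below spells out the definition file and container runtime explicitly and raises the verbosity; the `EE_TAG` and `EE_FILE` variable names are my own, and the guards are only there so the snippet fails gently when run from the wrong place.

```shell
EE_TAG="custom-ee:1.1"
EE_FILE="execution-environment.yml"

if ! command -v ansible-builder >/dev/null 2>&1; then
  echo "ansible-builder not found on PATH"
elif [ ! -f "$EE_FILE" ]; then
  echo "$EE_FILE not found; run this from the custom-ee directory"
else
  # --verbosity 3 prints the full output of each container build step.
  ansible-builder build \
    --file "$EE_FILE" \
    --tag "$EE_TAG" \
    --container-runtime podman \
    --verbosity 3
fi
```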

Because the images are just standard Podman images, you can run the podman images command to see it. You should see the output display ‘localhost/custom-ee’ or whatever you tagged your image with.

$ podman images

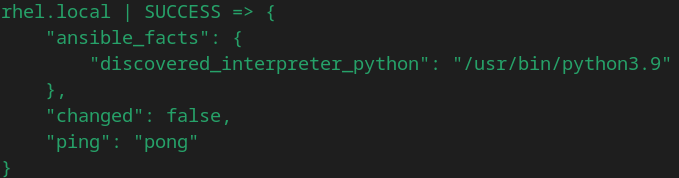

If the build was successful and the image is available, you can test the image with Ansible navigator. I’m going to test with a minimal RHEL 9 installation that I have running. In the ansible-navigator command, you can specify the --eei flag to change the EE from the default, or you can add a directive in an ansible-navigator.yml file in your ansible project, such as the following:

    ansible-navigator:
      execution-environment:
        image: localhost/custom-ee:1.1
        pull:
          policy: missing
      playbook-artifact:
        enable: false

If you’re using Ansible Automation Platform you can pull the EE from a container registry or Private Automation Hub and specify which EE to use in your Templates.

$ ansible-navigator run web.yml -m stdout --eei localhost/custom-ee:1.1

You can also inspect the image with podman inspect, using the image ID shown by the podman images command.

$ podman inspect 8e53f19f86e4
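Beyond inspecting the metadata, I find it useful to run a few commands inside the image to confirm that the pieces from the definition file really made it in. This is a sketch that assumes the image was tagged localhost/custom-ee:1.1 as above and that the image passes commands through to the container as usual.

```shell
IMAGE="localhost/custom-ee:1.1"

if ! command -v podman >/dev/null 2>&1; then
  echo "podman not found; skipping checks"
elif ! podman image exists "$IMAGE"; then
  echo "image $IMAGE not found; build it first"
else
  # Ansible core version and the Python interpreter it runs under.
  podman run --rm "$IMAGE" ansible --version
  # Collections installed from requirements.yml.
  podman run --rm "$IMAGE" ansible-galaxy collection list
  # A Python dependency from the definition file.
  podman run --rm "$IMAGE" python3.11 -c 'import netaddr; print(netaddr.__version__)'
fi
```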

Once you’ve got the EE working how you need it to, you can push it to either a public or private container registry for use in your environment.
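Pushing works the same way as for any other Podman image: log in, tag the image for the target registry, and push. In the sketch below, registry.example.com/myteam is a placeholder I made up, so substitute your own registry and namespace. The `PUSH` switch is also my own addition so that nothing is pushed by accident.

```shell
# Hypothetical values: replace with your own registry and namespace.
REGISTRY="registry.example.com/myteam"
LOCAL_IMAGE="localhost/custom-ee:1.1"
# Strip the "localhost/" prefix and prepend the registry path.
REMOTE_IMAGE="${REGISTRY}/${LOCAL_IMAGE#localhost/}"

if [ "${PUSH:-0}" = "1" ]; then
  # Log in to the registry host (the part before the first slash).
  podman login "${REGISTRY%%/*}"
  podman tag "$LOCAL_IMAGE" "$REMOTE_IMAGE"
  podman push "$REMOTE_IMAGE"
else
  echo "would push $LOCAL_IMAGE as $REMOTE_IMAGE (set PUSH=1 to run)"
fi
```

Once the image is in a registry, the image setting in ansible-navigator.yml or the EE definition in Automation Platform can point at the remote name instead of localhost/custom-ee:1.1.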