Previously I wrote about using Ansible to manage the configuration of Linux servers. I love using Ansible and use it almost every day. However, in a large Enterprise environment with multiple users and a lot of Ansible roles and playbooks, Ansible on its own can become difficult to maintain.

In this post I’m going to run through configuring Oracle Linux Automation Manager. Oracle’s Automation Manager is essentially a rebranded build of AWX, the upstream project behind Ansible Automation Platform, and provides a web user interface to easily manage your Ansible deployments and inventory.

I’m demonstrating OLAM instead of Red Hat’s Ansible Automation Platform or upstream AWX because I’ve had recent experience deploying Oracle Linux Automation Manager in an Enterprise environment. The most recent version of OLAM as of this writing is version 2, which is based on AWX version 19. The newer versions of AAP that Red Hat provides and the community AWX version are both installed with Kubernetes or OpenShift, which I don’t want to worry about for the purposes of this article. OLAMv2 can be installed from RPM packages with DNF, yet it still uses the newer Ansible Automation Platform architecture. I really want to dig into the underlying components such as Receptor and the Execution Environments, and I feel like this is the least complex path for my purposes.

This will also give you a good platform to get familiar with AAP without the complexity of setting up Kubernetes or managing containers. As much as I love Kubernetes, containers and OpenShift, I think it’s important to remember that underneath container platforms is still Linux, and knowing how to work with Linux is an important skill.

This is a really great platform to get familiar with. You can really expand your Ansible deployments with a lot of flexibility using OLAM or AWX in general.

Oracle provide access to Automation Manager directly in their Yum repositories for Oracle Linux 8 which makes installation really simple, particularly if you already run Oracle Enterprise Linux or have a non-RHEL environment.

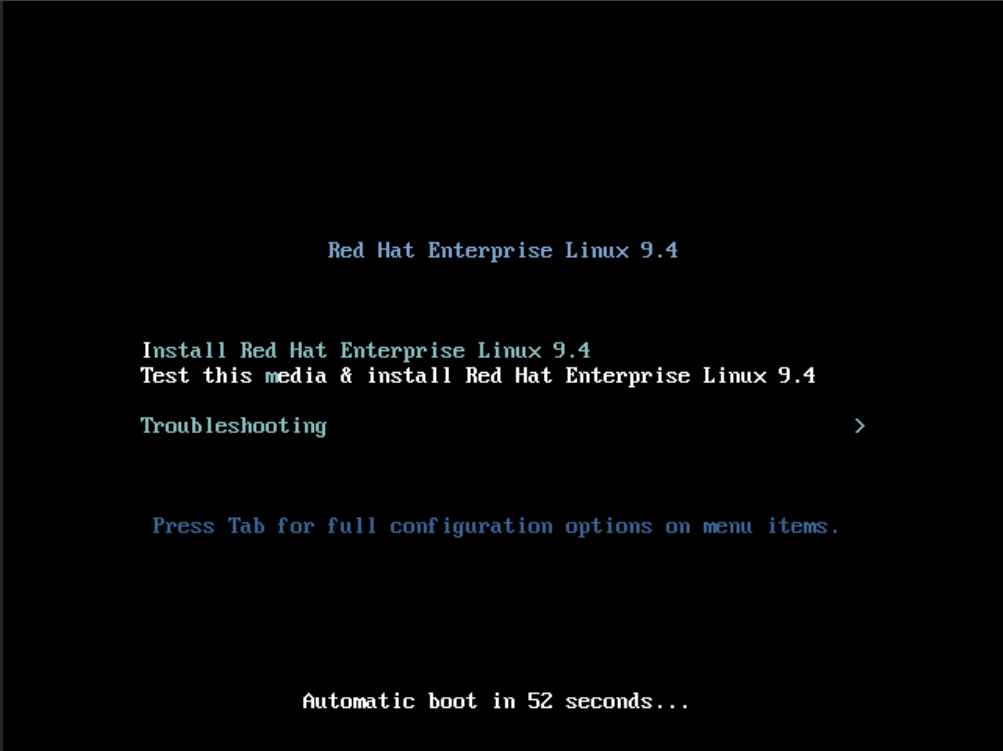

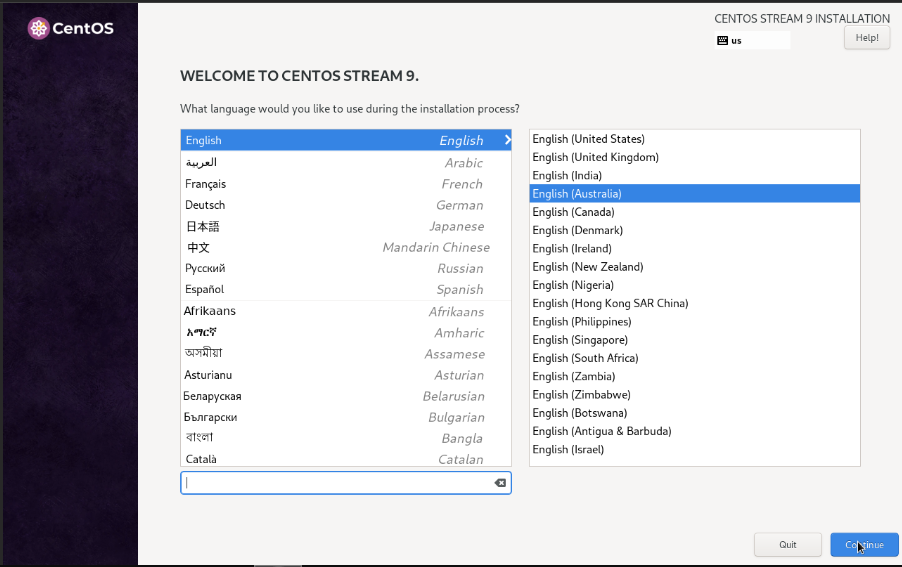

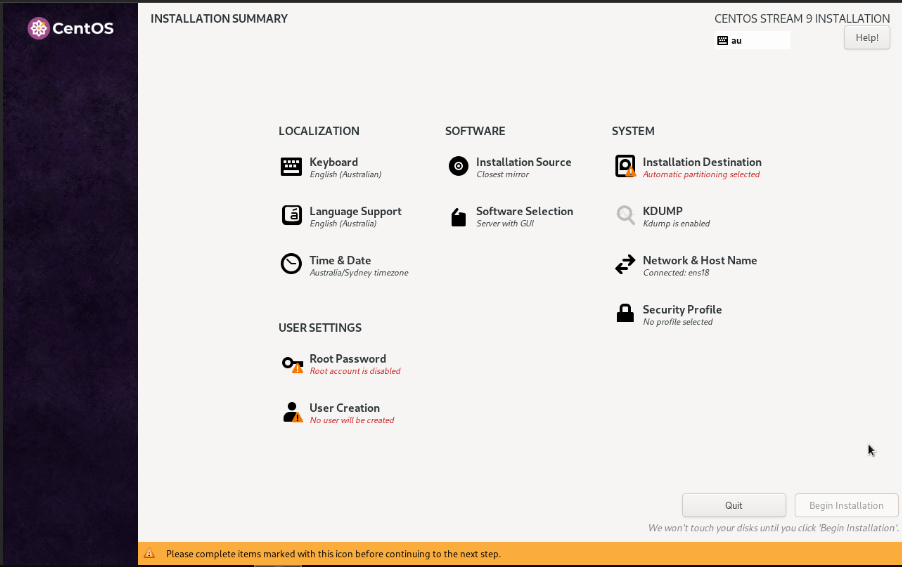

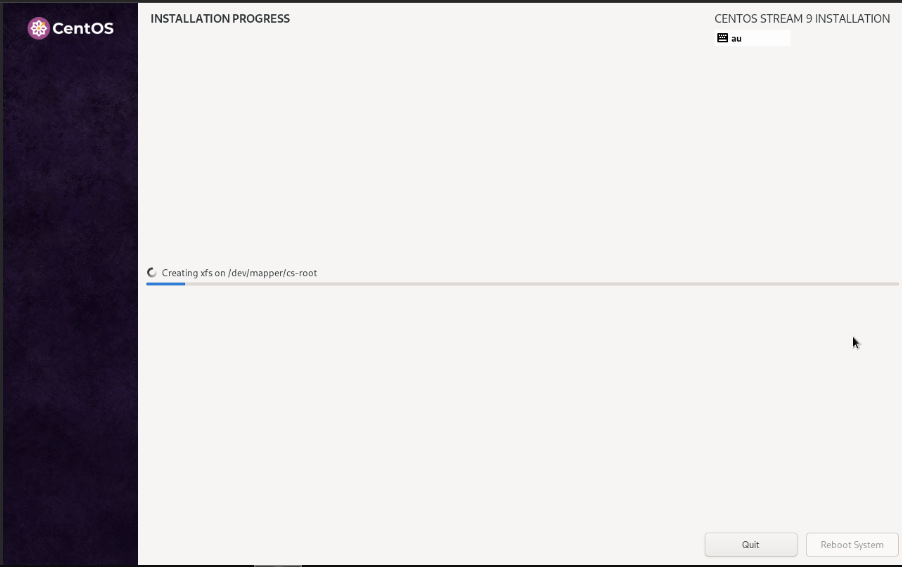

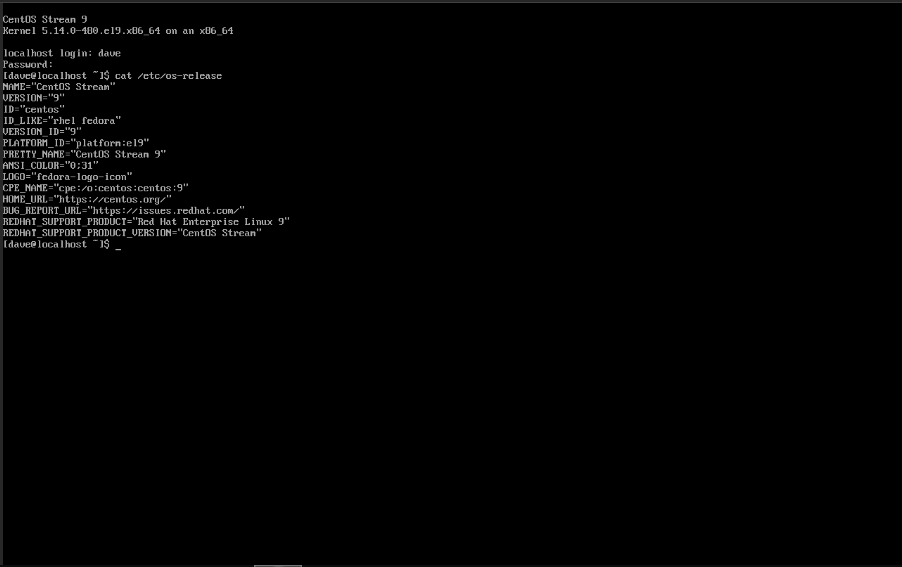

In this post I’ll install OL Automation Manager onto an Oracle Linux 8 virtual machine running in Proxmox. I won’t detail getting Oracle Linux installed, as I’ve already done a post about RHEL and CentOS and the installation steps are the same. I’ll install OLAM onto a single virtual machine rather than a cluster because it’s just for my own testing environment. In a production environment you should use multiple machines.

Once Oracle Linux has been set up you can start the installation of Oracle Linux Automation Manager. First we have to enable the Automation Manager 2 repository.

$ sudo dnf install oraclelinux-automation-manager-release-el8

Next we need to enable the PostgreSQL database. I’m going to use PostgreSQL 13.

$ sudo dnf module reset postgresql

$ sudo dnf module enable postgresql:13

$ sudo dnf install postgresql-server

$ sudo postgresql-setup --initdb

$ sudo sed -i "s/#password_encryption.*/password_encryption = scram-sha-256/" /var/lib/pgsql/data/postgresql.conf

$ sudo systemctl enable --now postgresql

Next, set up the awx user in PostgreSQL.

$ sudo su - postgres -c "createuser -S -P awx"

Enter the password when prompted, then create the awx database.

$ sudo su - postgres -c "createdb -O awx awx"

Open the file /var/lib/pgsql/data/pg_hba.conf and add the following line:

host all all 0.0.0.0/0 scram-sha-256

In the file /var/lib/pgsql/data/postgresql.conf, uncomment the “listen_addresses = 'localhost'” line.
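If you'd rather script those two edits than open an editor, here is a minimal sketch of how I might do it. The PGDATA_DIR variable is my own invention for illustration, and it defaults to a scratch directory seeded with sample files so you can try the commands without touching the real /var/lib/pgsql/data. It also assumes GNU sed, which is what Oracle Linux ships.

```shell
# Sketch: apply the pg_hba.conf and postgresql.conf edits non-interactively.
# PGDATA_DIR is a hypothetical variable; it defaults to a scratch directory
# so nothing under /var/lib/pgsql is modified unless you point it there.
set -eu

PGDATA_DIR="${PGDATA_DIR:-$(mktemp -d)}"
HBA="$PGDATA_DIR/pg_hba.conf"
CONF="$PGDATA_DIR/postgresql.conf"

# Seed small sample files when none exist (scratch mode only).
[ -f "$HBA" ]  || printf '%s\n' 'local all all peer' > "$HBA"
[ -f "$CONF" ] || printf '%s\n' "#listen_addresses = 'localhost'" '#password_encryption = md5' > "$CONF"

# Append the host rule only if it is not already present, so reruns are harmless.
RULE='host all all 0.0.0.0/0 scram-sha-256'
grep -qxF "$RULE" "$HBA" || printf '%s\n' "$RULE" >> "$HBA"

# Uncomment listen_addresses and set the password hashing method.
sed -i "s/^#listen_addresses/listen_addresses/" "$CONF"
sed -i "s/^#password_encryption.*/password_encryption = scram-sha-256/" "$CONF"

# Show the resulting settings for a quick visual check.
grep -E '^(listen_addresses|password_encryption)' "$CONF"
```

To run it against the real files you would set PGDATA_DIR=/var/lib/pgsql/data and run it as root. PostgreSQL was already started earlier, so it needs a restart (sudo systemctl restart postgresql) before the pg_hba.conf change takes effect.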

Now that the database is ready, we can install Automation Manager using DNF.

$ sudo dnf install ol-automation-manager

That should only take a moment. Next you’ll need to edit the file /etc/redis.conf and add the following two lines at the bottom of the file.

unixsocket /var/run/redis/redis.sock

unixsocketperm 775

Next, edit the file /etc/tower/settings.py. If you’re installing in a cluster configuration you’ll need to make a couple of extra changes, but for this single host installation the only change we need to make is to the database configuration settings. Add the password you chose earlier when creating the awx user in PostgreSQL and set the host to ‘localhost’.
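For reference, this is roughly what I'd expect the database stanza in settings.py to look like once it's filled in. Treat it as a sketch: the layout and the 'awx.main.db.profiled_pg' engine name are assumptions based on upstream AWX, and SCRATCH and DB_PASSWORD are hypothetical variables of my own. The snippet only writes the example to a scratch file so you can compare it with the block already present in /etc/tower/settings.py.

```shell
# Sketch only: write an example DATABASES stanza to a scratch file for review.
# The stanza layout and engine name are assumptions based on upstream AWX;
# keep whatever ENGINE value your installed settings.py already uses.
set -eu

SCRATCH="${SCRATCH:-$(mktemp)}"
DB_PASSWORD="${DB_PASSWORD:-changeme}"   # placeholder for the awx role's password

cat > "$SCRATCH" <<EOF
DATABASES = {
    'default': {
        'ATOMIC_REQUESTS': True,
        'ENGINE': 'awx.main.db.profiled_pg',
        'NAME': 'awx',
        'USER': 'awx',
        'PASSWORD': '$DB_PASSWORD',
        'HOST': 'localhost',
        'PORT': '5432',
    }
}
EOF

# Print the example so it can be compared with the real settings file.
cat "$SCRATCH"
```

The only two values this walkthrough actually changes are PASSWORD and HOST.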

Now we’ll change users to the awx user to run the next part of the installation.

$ sudo su -l awx -s /bin/bash

$ podman system migrate

$ podman pull container-registry.oracle.com/oracle_linux_automation_manager/olam-ee:latest

$ awx-manage migrate

$ awx-manage createsuperuser --username admin --email [email protected]

After running the createsuperuser command you’ll be asked to create a password. This is the username and password used to log in to the web UI, so don’t forget it.

Next generate an SSL certificate so you can access Automation Manager over HTTPS.

$ sudo openssl req -x509 -nodes -days 365 -newkey rsa:2048 -keyout /etc/tower/tower.key -out /etc/tower/tower.crt

Then replace the default /etc/nginx/nginx.conf configuration file with this one.
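The openssl command above prompts for the certificate fields interactively. If you want to skip the prompts, a sketch like the following passes the subject on the command line and then inspects the result. CERT_DIR and CN are hypothetical variables I've added for illustration; CERT_DIR defaults to a scratch directory so you can try it without writing into /etc/tower.

```shell
# Sketch: generate the same self-signed certificate without interactive prompts.
# CERT_DIR and CN are hypothetical variables; CERT_DIR defaults to a scratch
# directory rather than /etc/tower.
set -eu

CERT_DIR="${CERT_DIR:-$(mktemp -d)}"
CN="${CN:-awx.local}"

# -subj supplies the subject inline, so openssl does not ask any questions.
openssl req -x509 -nodes -days 365 -newkey rsa:2048 \
  -subj "/CN=$CN" \
  -keyout "$CERT_DIR/tower.key" -out "$CERT_DIR/tower.crt"

# Print the subject and expiry date to confirm what was generated.
openssl x509 -in "$CERT_DIR/tower.crt" -noout -subject -enddate
```

For the real installation you would set CERT_DIR=/etc/tower, run it with sudo, and use the hostname you plan to browse to as the CN.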

Next we can start to provision the installation. Log back in as the awx user.

$ sudo su -l awx -s /bin/bash

$ awx-manage provision_instance --hostname=awx.local --node_type=hybrid

$ awx-manage register_default_execution_environments

$ awx-manage register_queue --queuename=default --hostnames=awx.local

$ awx-manage register_queue --queuename=controlplane --hostnames=awx.local

Change the hostname(s) to whatever suits your environment. I used awx.local for the purposes of this demonstration. You can now type exit to leave the awx user session and go back to the rest of the setup as your normal user.
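Since the hostname is repeated across three of those commands, it can be handy to keep it in one variable. Here's a small sketch that does that. NODE, DRY_RUN, run and provision are names I made up for illustration, and by default it only prints the commands rather than running them.

```shell
# Sketch: the provisioning commands from above with the hostname in one place.
# NODE and DRY_RUN are hypothetical variables. With DRY_RUN=1 (the default)
# the commands are printed only; set DRY_RUN=0 as the awx user to execute them.
set -eu

NODE="${NODE:-awx.local}"
DRY_RUN="${DRY_RUN:-1}"

# Echo each command, and execute it only when dry-run mode is switched off.
run() {
  echo "+ $*"
  if [ "$DRY_RUN" != 1 ]; then "$@"; fi
}

provision() {
  run awx-manage provision_instance --hostname="$NODE" --node_type=hybrid
  run awx-manage register_default_execution_environments
  run awx-manage register_queue --queuename=default --hostnames="$NODE"
  run awx-manage register_queue --queuename=controlplane --hostnames="$NODE"
}

provision
```

Running it with NODE=olam01.example.com (a made-up name) shows exactly what would be executed before you commit to it.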

Replace the /etc/receptor/receptor.conf file with this one.

You can now start OL Automation Manager.

$ sudo systemctl enable --now ol-automation-manager.service

Now we can preload some data.

$ sudo su -l awx -s /bin/bash

$ awx-manage create_preload_data

Finally, we’ll open up the firewall to allow access.

$ sudo firewall-cmd --add-service=https --permanent

$ sudo firewall-cmd --add-service=http --permanent

$ sudo firewall-cmd --reload

You should now be able to open a browser and access the Web UI.

Log in using the admin credentials you created during the setup process.
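If the page doesn't load, a quick check from the command line can tell you whether the service is answering at all. The sketch below defines a small helper that requests the /api/v2/ping/ endpoint from upstream AWX and prints the HTTP status code. I'm assuming OLAM exposes the same endpoint; check_ui and OLAM_HOST are names I've made up for illustration.

```shell
# Sketch: probe the AWX-style ping endpoint and print the HTTP status code.
# check_ui and OLAM_HOST are hypothetical names. -k skips certificate
# verification because the certificate generated earlier is self-signed.
check_ui() {
  host="${1:-${OLAM_HOST:-awx.local}}"
  curl -k -s -o /dev/null -w '%{http_code}\n' --max-time 10 \
    "https://$host/api/v2/ping/"
}

# Example call (not run here): check_ui awx.local
```

A 200 means nginx and the web service are both responding. A connection failure usually points at the firewall or at the ol-automation-manager service not running.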